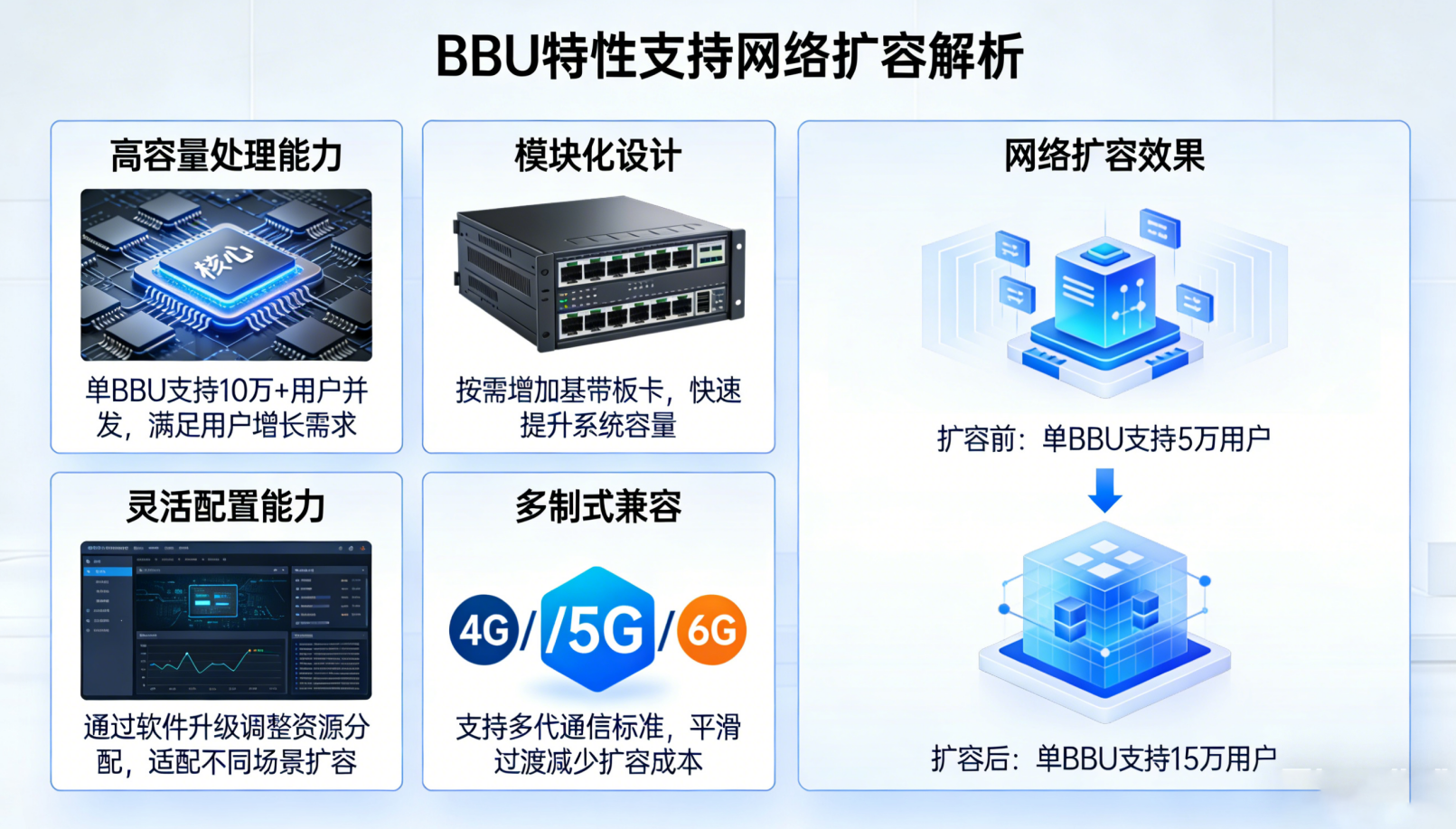

Modular BBU Hardware Design for Vertical and Horizontal Scaling

Modern communication equipment relies on Baseband Unit (BBU) architectures that support two complementary scaling strategies: vertical scaling—enhancing individual units with additional processing power—and horizontal scaling—deploying more BBU nodes across the network to distribute load and increase capacity.

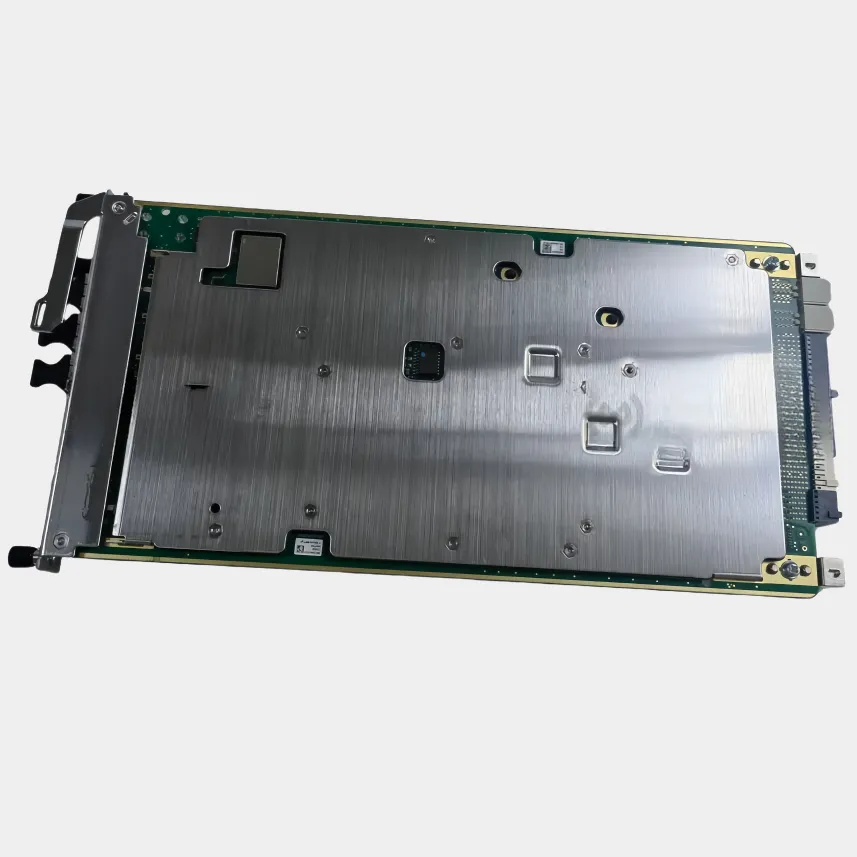

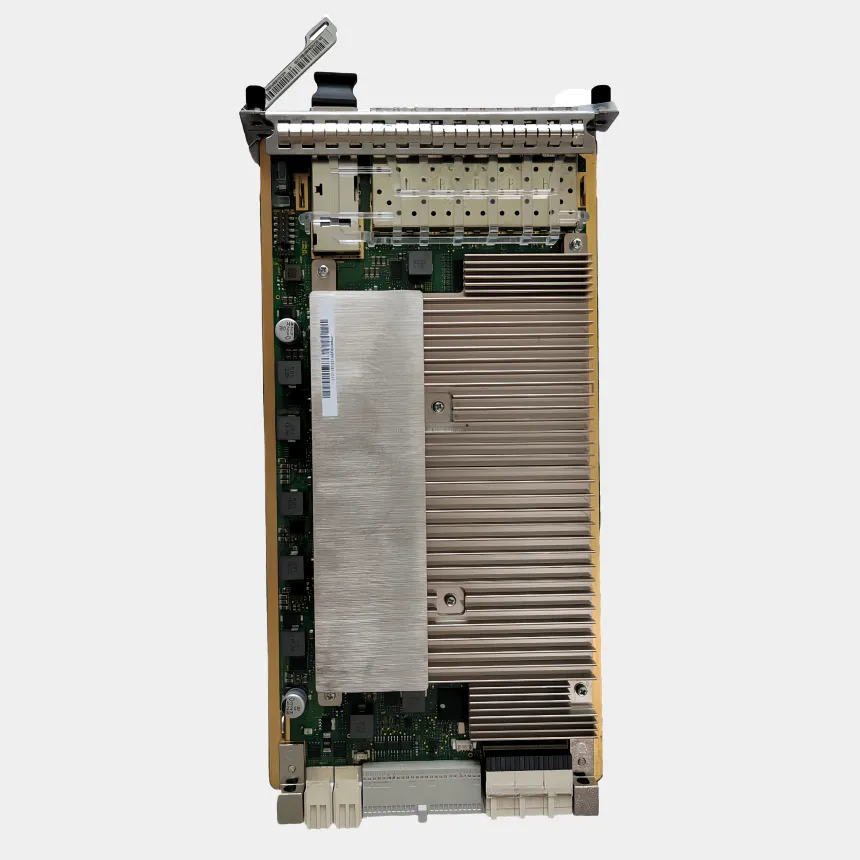

Hot-Swappable Modules and Chassis Flexibility for Incremental Capacity Growth

Field-replaceable modules with hot-swap support let operators install or replace hardware components such as processor cards and radio interface units without interrupting service. The main benefit is rapid capacity expansion: adding a processor card can raise throughput by roughly 40% almost immediately. Modern chassis designs provide standardized slots and adaptable backplanes that accept a range of modules, including encryption accelerators and fronthaul interfaces. This flexibility lets operators build exactly the configuration they need without vendor lock-in, and it also saves rack space. Industry tests have shown that hot-swap capability reduces maintenance downtime by roughly 90% compared with older systems whose hardware configuration was fixed at installation.
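The slot model behind incremental capacity growth can be sketched in a few lines. This is a hypothetical illustration, not a vendor API: the `Chassis` and `Module` classes, the module kinds, and the 10 Gbps per-card figure are all assumptions. It shows only the key property described above: inserting or removing one module changes total capacity while the other slots stay untouched.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass(frozen=True)
class Module:
    kind: str               # hypothetical kinds: "processor", "fronthaul", "crypto"
    throughput_gbps: float  # capacity contributed (illustrative numbers only)


@dataclass
class Chassis:
    num_slots: int
    slots: Dict[int, Module] = field(default_factory=dict)

    def hot_insert(self, slot: int, module: Module) -> None:
        if not 0 <= slot < self.num_slots:
            raise IndexError(f"slot {slot} does not exist")
        if slot in self.slots:
            raise ValueError(f"slot {slot} is occupied")
        # Only this slot changes; modules in other slots keep serving traffic.
        self.slots[slot] = module

    def hot_remove(self, slot: int) -> Optional[Module]:
        # Returns the removed module, or None if the slot was empty.
        return self.slots.pop(slot, None)

    def capacity_gbps(self) -> float:
        # Only processor cards count toward baseband processing capacity.
        return sum(m.throughput_gbps for m in self.slots.values() if m.kind == "processor")


chassis = Chassis(num_slots=8)
chassis.hot_insert(0, Module("processor", 10.0))
chassis.hot_insert(1, Module("processor", 10.0))
chassis.hot_insert(2, Module("fronthaul", 0.0))
print(chassis.capacity_gbps())  # 20.0
chassis.hot_insert(3, Module("processor", 10.0))  # added while slots 0-2 keep running
print(chassis.capacity_gbps())  # 30.0
```

A real chassis manager would also handle power sequencing and backplane link training on insertion; those steps are out of scope for this sketch.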

CPU/FPGA/GPU Expansion and Memory Bandwidth Optimization for Compute-Intensive Workloads

To meet both 5G-Advanced requirements and Open RAN specifications, modern Baseband Units (BBUs) combine heterogeneous compute resources. Field-Programmable Gate Arrays (FPGAs) handle ultra-low-latency signal processing where every microsecond counts. Graphics Processing Units (GPUs) accelerate AI workloads such as beamforming and interference management. Multi-socket Central Processing Units (CPUs) run control-plane operations and overall orchestration. On the memory side, manufacturers are adopting DDR5 and HBM3, which together can deliver aggregate bandwidth exceeding 1 terabyte per second. This bandwidth is essential for massive Multiple-Input Multiple-Output (MIMO) systems and for keeping up with real-time fronthaul processing. Several optimizations make this possible: cache partitioning so that critical baseband functions are not starved, NUMA-aware memory allocation across sockets, and built-in hardware compression that reduces fronthaul traffic by about 35%. Together, these components keep latency below 5 milliseconds and deliver reliable 5G New Radio (NR) performance even when cell sites carry sustained data flows of 200 gigabits per second.
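A quick back-of-envelope calculation shows why fronthaul bandwidth and compression matter for massive MIMO. This sketch assumes a CPRI-style time-domain I/Q stream at 122.88 Msps per antenna (the sample rate of a 100 MHz NR carrier at 30 kHz subcarrier spacing), 16-bit I and Q components, and 64 antenna ports, and it ignores line coding and control-word overhead. These parameters are illustrative assumptions; an O-RAN split 7.2 interface carries frequency-domain data and needs considerably less.

```python
def fronthaul_bps(sample_rate_sps: int, bits_per_component: int, antennas: int) -> int:
    # Each complex sample carries one I and one Q component.
    return sample_rate_sps * 2 * bits_per_component * antennas


def after_compression(bps: float, reduction: float = 0.35) -> float:
    # `reduction` is the fraction of traffic removed (about 35% per the text).
    return bps * (1.0 - reduction)


raw = fronthaul_bps(122_880_000, 16, 64)
print(f"raw: {raw / 1e9:.2f} Gbps")                            # raw: 251.66 Gbps
print(f"compressed: {after_compression(raw) / 1e9:.2f} Gbps")  # compressed: 163.58 Gbps
```

Applied to the 200 Gbps flow mentioned above, the same 35% reduction would leave roughly 130 Gbps on the fronthaul link.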

BBU Integration with Cloud-Native and Programmable Networking (SDN/NFV)

Control-Plane Decoupling and Dynamic Orchestration via SDN-Enabled BBU Management

Software-Defined Networking (SDN) changes how baseband units are managed by separating control functions from data processing. Centralized controllers make most decisions, while the BBUs themselves retain local control over data forwarding and radio resource management. Through open application programming interfaces (APIs), operators can adjust spectrum allocation on the fly, switch modulation schemes as conditions change, and rebalance workload between cell sectors based on real-time traffic. During peak periods such as rush hour, SDN controllers react almost immediately, shifting capacity toward congested sectors without manual reconfiguration. The result is less downtime and fewer manual interventions by technicians. A 2024 industry report found that operators adopting this approach typically reduce overall network management costs by about a third compared with older approaches based on device-by-device management.
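The sector-rebalancing step can be sketched as a pure function that a centralized controller might run on each measurement interval. This is a hypothetical sketch, not an O-RAN or vendor API: the `rebalance` name, the integer "capacity units" (standing in for PRBs or compute shares), and the per-sector floor are assumptions. Capacity is split in proportion to measured demand, each sector keeps a minimum, and the largest-remainder method makes the allocation sum exactly to the pool.

```python
from typing import Dict


def rebalance(demand: Dict[str, float], pool: int, floor: int = 1) -> Dict[str, int]:
    """Split `pool` integer capacity units across sectors in proportion to demand."""
    if pool < floor * len(demand):
        raise ValueError("pool too small for the per-sector floor")
    spare = pool - floor * len(demand)
    total = sum(demand.values())
    if total == 0:
        # No measured load: spread the spare units evenly.
        shares = {s: spare / len(demand) for s in demand}
    else:
        shares = {s: spare * d / total for s, d in demand.items()}
    # Every sector gets its floor plus the whole-number part of its share.
    alloc = {s: floor + int(share) for s, share in shares.items()}
    leftover = pool - sum(alloc.values())
    # Largest-remainder method: hand leftover units to the largest fractional parts.
    by_remainder = sorted(shares, key=lambda s: shares[s] - int(shares[s]), reverse=True)
    for s in by_remainder[:leftover]:
        alloc[s] += 1
    return alloc


rush_hour = {"sector_A": 70, "sector_B": 20, "sector_C": 10}
print(rebalance(rush_hour, pool=100, floor=5))
# {'sector_A': 65, 'sector_B': 22, 'sector_C': 13}
```

The floor keeps lightly loaded sectors from being starved when traffic shifts suddenly; a production controller would also smooth the demand inputs to avoid oscillating between intervals.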

Virtualized Baseband Functions and Automated Lifecycle Management with NFV

Network Functions Virtualization (NFV) is changing how telecom operators run their infrastructure. Instead of relying on expensive proprietary BBU hardware, operators run baseband functions on commercial off-the-shelf (COTS) servers. Signal processing, channel coding, and Layer 2 protocols run as lightweight virtual network functions (VNFs) or as cloud-native network functions (CNFs). Platforms such as Kubernetes and ONAP automate the full lifecycle, including instantiation, scaling, healing, and patching, from centralized dashboards. When traffic spikes, NFV orchestration can quickly instantiate additional virtual BBU instances and distribute them across server clusters; when demand drops, it shuts down unused resources to save power. According to last year's Cloud RAN Benchmark study, this approach cuts capital costs by around half while maintaining 99.999% availability. NFV's biggest advantage, however, is update speed: operators can roll out new features to thousands of sites within minutes rather than weeks, enabling faster innovation cycles without interrupting service.
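One concrete mechanism behind this elastic scaling is the Kubernetes Horizontal Pod Autoscaler (HPA), whose documented rule is `desired = ceil(current * currentMetric / targetMetric)`, skipped when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below reimplements that rule in plain Python; treating virtual BBU instances as pods scaled on average CPU utilization, and the min/max bounds, are assumptions for illustration.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 100,
                     tolerance: float = 0.1) -> int:
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: skip scaling to avoid flapping.
        return current
    desired = math.ceil(current * ratio)
    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))   # 6: traffic spike, scale out
print(desired_replicas(10, current_metric=20, target_metric=60))  # 4: demand drops, scale in
print(desired_replicas(4, current_metric=62, target_metric=60))   # 4: within tolerance
```

The real HPA adds stabilization windows and scale-down rate policies on top of this formula, which this sketch omits.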

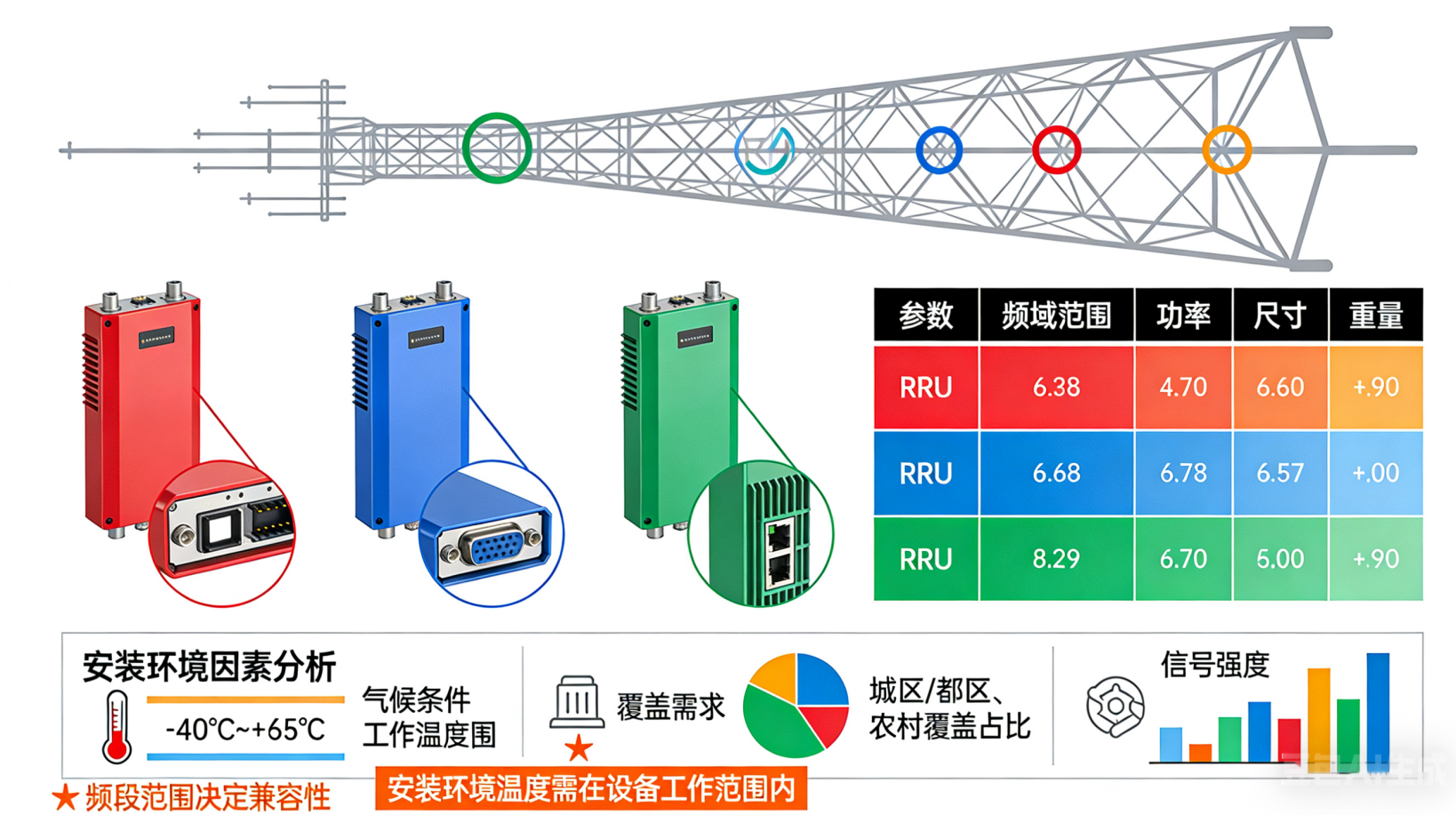

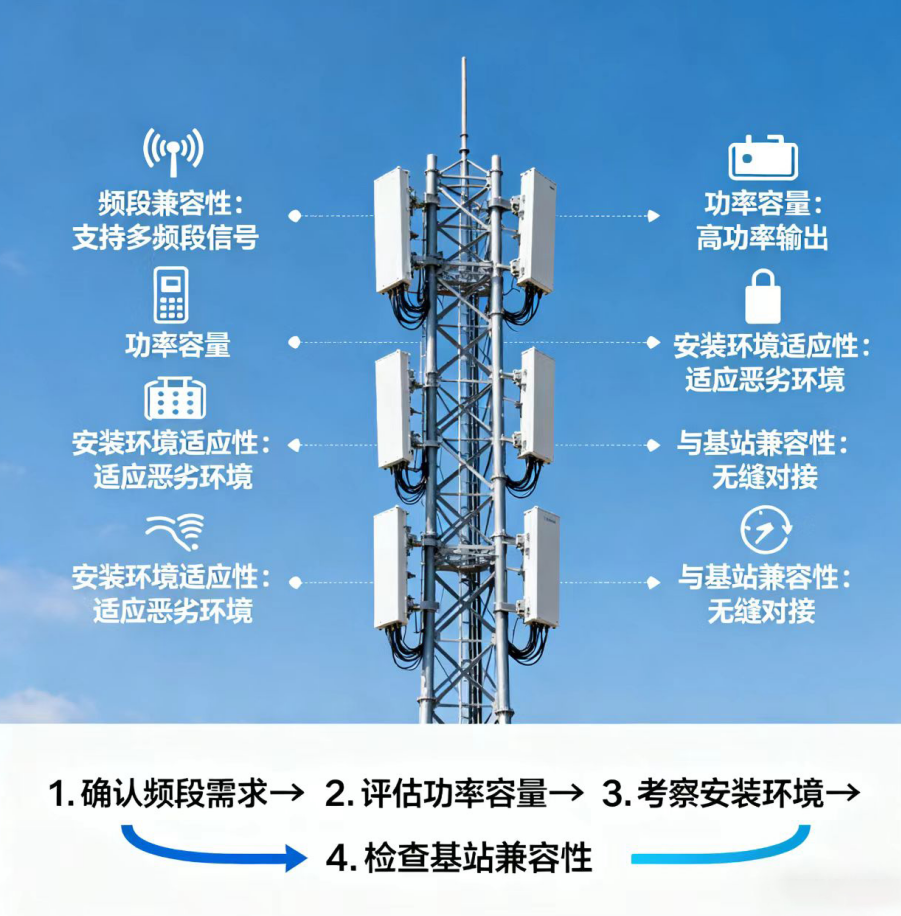

Deployment-Aware BBU Form Factors and Infrastructure Alignment

Sidecar vs. Rack-Mounted BBU Configurations for Distributed and Centralized vRAN

Choosing the right BBU form factor is essential for matching hardware design to the deployment model and its infrastructure constraints. Sidecar BBUs are compact, energy-efficient units installed on site, next to the antennas. Because they minimize transport delay, they suit applications that require highly reliable, low-latency connections, such as URLLC services and edge computing in distributed vRAN deployments. Rack-mounted BBUs, by contrast, consolidate baseband processing at central hubs. This approach can, in some deployments, reduce space requirements by around 40%, and it simplifies thermal management, backup power, and routine maintenance. Most operators favor rack-mounted configurations because they scale better and allow resources to be pooled across coverage areas. Sidecars nonetheless remain critical at space-constrained or hard-to-reach sites. Both form factors integrate with SDN and NFV, so either fits into a modern cloud-based network.
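The latency trade-off between the two form factors comes down largely to fiber distance. Light in optical fiber travels at roughly 2×10^8 m/s, or about 5 µs per kilometer one way. The helper below is a hypothetical sketch: the decision rule, the `choose_form_factor` name, and the latency budgets are assumptions for illustration, not values from any standard.

```python
FIBER_US_PER_KM = 5.0  # approximate one-way propagation delay in optical fiber


def fiber_delay_us(distance_km: float) -> float:
    return distance_km * FIBER_US_PER_KM


def choose_form_factor(distance_km: float, budget_us: float, has_fiber_to_hub: bool) -> str:
    # Centralize when the site can reach the hub and the added delay fits the budget;
    # otherwise keep baseband processing on site.
    if has_fiber_to_hub and fiber_delay_us(distance_km) <= budget_us:
        return "rack-mounted"
    return "sidecar"


print(choose_form_factor(15, budget_us=100, has_fiber_to_hub=True))  # rack-mounted (75 us)
print(choose_form_factor(40, budget_us=100, has_fiber_to_hub=True))  # sidecar (200 us)
print(choose_form_factor(5, budget_us=100, has_fiber_to_hub=False))  # sidecar (hard-to-reach site)
```

A tighter budget, as for URLLC traffic, shrinks the radius a central hub can serve and pushes more sites toward sidecar units.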

AI-Driven Resource Optimization Across Scalable BBU Clusters

Artificial intelligence has shifted BBU cluster management from reactive troubleshooting to proactive, adaptive operation. Real-time key performance indicators (KPIs), such as traffic load, the number of concurrently connected users, spectral efficiency, and hardware telemetry, feed into machine learning models. These models can forecast capacity requirements up to 48 hours ahead, and the forecasts drive automatic scaling of virtualized baseband functions. Reinforcement learning techniques continuously refine how compute, bandwidth, and power settings are allocated across the network. Powering down underutilized equipment cuts wasted energy by about 22%, and automated load balancing across servers raises overall utilization by around 30%, making it easier to expand BBU pools as 5G traffic grows. The result is an essentially self-healing infrastructure that avoids over-provisioning hardware up front, keeps latency below 5 milliseconds for critical applications, and absorbs unexpected traffic spikes without manual intervention.
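The forecast-then-scale loop can be illustrated with a much simpler model than the machine learning systems described above. This sketch swaps in Holt's linear method (double exponential smoothing), a classical forecasting technique, in place of both the ML forecaster and the reinforcement learning agent. The smoothing factors, the 20% headroom, and the per-instance capacity are hypothetical parameters.

```python
import math
from typing import Sequence


def holt_forecast(series: Sequence[float], horizon: int,
                  alpha: float = 0.5, beta: float = 0.3) -> float:
    """Holt's linear trend forecast, `horizon` steps past the last sample."""
    if len(series) < 2:
        raise ValueError("need at least two observations")
    level, trend = series[0], series[1] - series[0]
    for y in series[1:]:
        prev_level = level
        level = alpha * y + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return level + horizon * trend


def instances_needed(forecast_gbps: float, per_instance_gbps: float,
                     headroom: float = 0.2, minimum: int = 1) -> int:
    # Instances beyond this count are candidates for power-down until demand rises.
    return max(minimum, math.ceil(forecast_gbps * (1 + headroom) / per_instance_gbps))


hourly_gbps = [10, 20, 30, 40]
forecast = holt_forecast(hourly_gbps, horizon=2)
print(round(forecast, 6), instances_needed(forecast, per_instance_gbps=25))  # 60.0 3
```

Real traffic has strong daily seasonality, so a practical forecaster for a 48-hour horizon would need a seasonal term at minimum; this sketch only shows how a forecast translates into an instance count.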