Core Function of Optical Transceivers in High-Speed Networks

Electrical-to-Optical Conversion and Signal Integrity Preservation

Optical transceivers act as the go-between for electrical network gear and the thin strands of glass we call optical fibers. On the transmit side, a laser diode turns electrical signals into light pulses; on the receive side, a photodetector picks up the light and converts it back to electricity. This two-way conversion is what lets fiber networks carry massive amounts of data at very high speeds. Keeping those signals clean and intact matters a lot, which is why manufacturers pair PAM4 modulation with Digital Signal Processors (DSPs). PAM4 carries two bits per symbol by using four amplitude levels, and the DSP compensates for pulse spreading (dispersion), signal loss (attenuation), and the nonlinear effects that can corrupt a transmission. Even at 400G and beyond, these systems keep bit errors after correction extremely rare. Without this kind of precise electro-optical engineering, data centers and AI operations would be stuck waiting on every large data transfer.
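To make the PAM4 idea concrete, here is a minimal sketch of how pairs of bits can map onto four amplitude levels and back again. The normalized levels (-3, -1, 1, 3), the function names, and the fixed noise offset are illustrative assumptions, not a description of any particular DSP. Real receivers add equalization and FEC on top of this basic decision step.

```python
# Gray-coded PAM4 mapping: neighboring amplitude levels differ by one bit,
# so mistaking a level for its neighbor corrupts only a single bit.
# The normalized levels -3, -1, 1, 3 are an illustrative convention.
GRAY_PAM4 = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}
LEVEL_TO_BITS = {level: bits for bits, level in GRAY_PAM4.items()}


def pam4_encode(bits):
    """Map a flat list of bits (even length) to PAM4 symbol levels."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def pam4_decode(samples):
    """Slice each received sample to the nearest level and recover the bits."""
    bits = []
    for sample in samples:
        nearest = min(LEVEL_TO_BITS, key=lambda level: abs(level - sample))
        bits.extend(LEVEL_TO_BITS[nearest])
    return bits


bits = [0, 0, 0, 1, 1, 1, 1, 0]
symbols = pam4_encode(bits)            # four symbols carry eight bits
noisy = [s + 0.4 for s in symbols]     # small offset standing in for noise
print(symbols, pam4_decode(noisy) == bits)
```

Because four levels share the same voltage swing that two levels would otherwise occupy, the spacing between levels shrinks, which is why PAM4 links lean so heavily on DSP and error correction.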

How Wavelength, Data Rate, and Distance Interact to Define Performance

The performance and feasibility of a transceiver deployment come down to three factors working together: wavelength, data rate, and distance. Wavelength has to match the fiber type. For shorter distances, 850nm is commonly used with multimode fiber, handling things like 100G over about 100 meters. For longer runs, engineers turn to single-mode fiber at 1310nm or 1550nm. 400G links of around 2 kilometers typically run in the 1310nm band, while 1550nm is favored for the longest spans because fiber attenuation is lowest there. As data rates climb from 400G to 800G, there's no getting around either coherent optics or PAM4 signaling. That comes at a cost: increased power draw and greater sensitivity to impairments in the transmission path. Distance sets strict limits too. Without coherent optics, 80km connections often max out at around 200G because chromatic dispersion builds up and the optical signal-to-noise ratio drops off. On the flip side, shorter 10km links can handle 800G if proper forward error correction (FEC) and digital signal processing (DSP) compensation are applied. Real-world network designers spend a lot of time balancing these competing demands as they build systems that need to scale and adapt over time.
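One way to see how distance eats into a link is a simple loss budget. The sketch below covers attenuation only and ignores dispersion and noise, so treat it as a first-pass check. The launch power, receiver sensitivity, connector loss, and the 0.35 dB/km figure (a typical value for single-mode fiber near 1310nm) are illustrative assumptions, not numbers from any datasheet.

```python
def link_margin_db(tx_power_dbm, rx_sensitivity_dbm, length_km,
                   fiber_loss_db_per_km, connector_loss_db=1.0):
    """Return the remaining optical margin in dB (negative means the link fails).

    Only attenuation is modeled; dispersion and noise penalties are ignored.
    """
    budget = tx_power_dbm - rx_sensitivity_dbm
    total_loss = length_km * fiber_loss_db_per_km + connector_loss_db
    return budget - total_loss


# Hypothetical module: 0 dBm launch power, -6 dBm receiver sensitivity.
for km in (2, 10, 40):
    margin = link_margin_db(0.0, -6.0, km, fiber_loss_db_per_km=0.35)
    print(f"{km:>3} km: margin {margin:+.1f} dB")
```

With these assumed numbers the 2km and 10km links close with margin to spare, while the 40km link does not. That gap is what longer-reach modules make up with stronger lasers, more sensitive receivers, or lower-loss wavelengths.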

Critical Components Powering Modern Optical Transceivers

Laser Diodes, Photodetectors, and DSPs: Enabling Speed and Accuracy

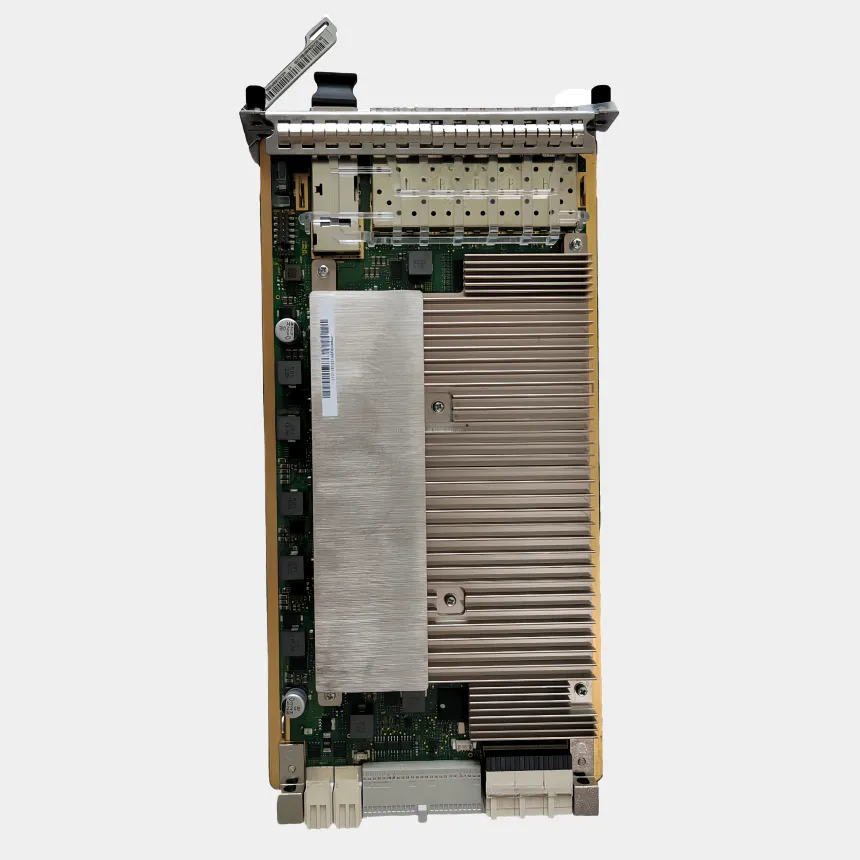

Today's optical transceivers depend on three main parts working together: laser diodes, photodetectors, and Digital Signal Processors (DSPs). The laser diodes create stable, fast optical signals, usually through either distributed feedback (DFB) lasers or newer silicon photonics designs, which helps keep signal loss minimal when sending data through fiber. On the receive side, most systems use either PIN or avalanche photodiodes (APDs) to turn the incoming light back into clean electrical signals. These detectors need to respond quickly while keeping noise down so the data stays intact. The DSPs then handle the complex work behind the scenes: equalizing signals in real time, recovering clock timing, and decoding forward error correction (FEC) to fix errors picked up during transmission. Together, these components achieve bit error rates below 1E-15, even over distances past 100 kilometers. They also deliver the deterministic latency that modern hyperscale data centers and growing 5G network infrastructure depend on.
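To put a bit error rate of 1E-15 in perspective, a little arithmetic helps. The sketch below simply multiplies line rate, error rate, and time. The function names are made up for illustration, and the 400 Gb/s line rate is an example value.

```python
def expected_bit_errors(line_rate_bps, ber, seconds):
    """Average number of bit errors over the given time window."""
    return line_rate_bps * ber * seconds


def mean_seconds_between_errors(line_rate_bps, ber):
    """Average time between bit errors at a steady line rate."""
    return 1.0 / (line_rate_bps * ber)


rate = 400e9   # a 400 Gb/s link, used here as an example
ber = 1e-15

print(expected_bit_errors(rate, ber, 86_400))    # about 34.6 errors per day
print(mean_seconds_between_errors(rate, ber))    # about 2500 seconds
```

So even at 1E-15, a fully loaded 400G link still sees a few dozen bit errors per day on average, which is why the target gets tighter rather than looser as line rates rise.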

The 400G+ Efficiency Challenge: Balancing Power, Heat, and Bandwidth

Going past the 400G threshold creates serious problems with heat and power consumption. Every time data rates double, power requirements jump around 60 to 70 percent, which packs more heat into densely populated switch ports. Left unchecked, that extra heat distorts signals, makes components wear out faster, and ultimately cuts system reliability. The industry has come up with several approaches to tackle these issues. Some manufacturers integrate micro-channel heatsinks. Others implement adaptive power management that can cut energy use by about 30 percent when traffic is light. There's also growing adoption of silicon photonics, which shortens the electrical connections between components and reduces both signal loss and heat production. On the materials front, we're seeing improvements too: lasers made from indium phosphide have better wall-plug efficiency than traditional options. All these advancements mean modern transceivers can handle up to 400 watts per rack unit while keeping their internal temperatures under 50 degrees Celsius, something that meets the thermal standards set by IEEE and OIF for continuous high-speed operation.
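The 60 to 70 percent figure is easier to reason about with numbers. The sketch below applies that growth per doubling to a hypothetical 12 W module at 400G (the 12 W starting point is an assumption for illustration only) and also works out energy per bit, which is where the scaling looks better than the raw wattage suggests.

```python
import math


def scaled_power_w(base_power_w, base_rate_g, target_rate_g, growth_per_doubling):
    """Scale module power assuming a fixed fractional increase per rate doubling."""
    doublings = math.log2(target_rate_g / base_rate_g)
    return base_power_w * (1 + growth_per_doubling) ** doublings


def picojoules_per_bit(power_w, rate_g):
    """Energy per bit: 1 W at 1 Gb/s equals 1000 pJ per bit."""
    return power_w / rate_g * 1000


base_w = 12.0  # hypothetical 400G module power, not a datasheet value
for growth in (0.6, 0.7):
    p800 = scaled_power_w(base_w, 400, 800, growth)
    print(f"+{growth:.0%} per doubling: {p800:.1f} W at 800G, "
          f"{picojoules_per_bit(p800, 800):.1f} pJ/bit "
          f"(vs {picojoules_per_bit(base_w, 400):.1f} pJ/bit at 400G)")
```

Under these assumptions the total power per port rises, which is the thermal problem, while the energy spent per bit falls by 15 to 20 percent, which is the efficiency gain.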

Form Factors and Standards: Matching Optical Transceivers to Infrastructure Needs

Selecting the right form factor ensures optimal port density, thermal management, and interoperability across evolving infrastructure. Standardized mechanical and electrical interfaces—from SFP to QSFP-DD—enable plug-and-play compatibility while supporting progressive bandwidth upgrades without full system overhauls.

SFP, QSFP, OSFP, and QSFP-DD — Scaling Density and Speed from 1G to 800G

SFP and SFP+ modules deliver speeds from 1G to 10G in a compact, single-lane form factor that works well in edge networking and access points where space matters. QSFP versions pack four lanes together, making them suitable for speeds up to 100G (QSFP28) in the densely packed switches found throughout most modern cloud data centers. Looking ahead, both OSFP and QSFP-DD can handle bandwidth requirements of 400G to 800G thanks to their eight-lane architecture and better heat management. These newer designs double the number of electrical lanes per port compared with the older QSFP28 standard, so the same rack unit carries far more bandwidth. According to recent findings at OFC 2023, this progression has cut power consumption per gigabit by about 30%, which makes it much easier for companies to upgrade from existing 100G infrastructure toward 800G systems optimized for artificial intelligence and machine learning workloads.
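The speed steps follow directly from lane count times per-lane rate. Here is a small sketch of that arithmetic. The dictionary and function names are illustrative, and the lane rates are nominal figures (25G, 50G, and 100G per lane) rather than exact signaling rates.

```python
# Electrical lane counts per form factor.
LANES = {"SFP": 1, "QSFP": 4, "QSFP-DD": 8, "OSFP": 8}


def aggregate_gbps(form_factor, lane_rate_gbps):
    """Nominal module bandwidth = number of lanes x nominal rate per lane."""
    return LANES[form_factor] * lane_rate_gbps


print(aggregate_gbps("SFP", 10))       # 10
print(aggregate_gbps("QSFP", 25))      # 100 (QSFP28)
print(aggregate_gbps("QSFP-DD", 50))   # 400
print(aggregate_gbps("OSFP", 100))     # 800
```

Moving from 100G to 800G therefore combines two changes: twice as many lanes per module and a faster rate on each lane.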

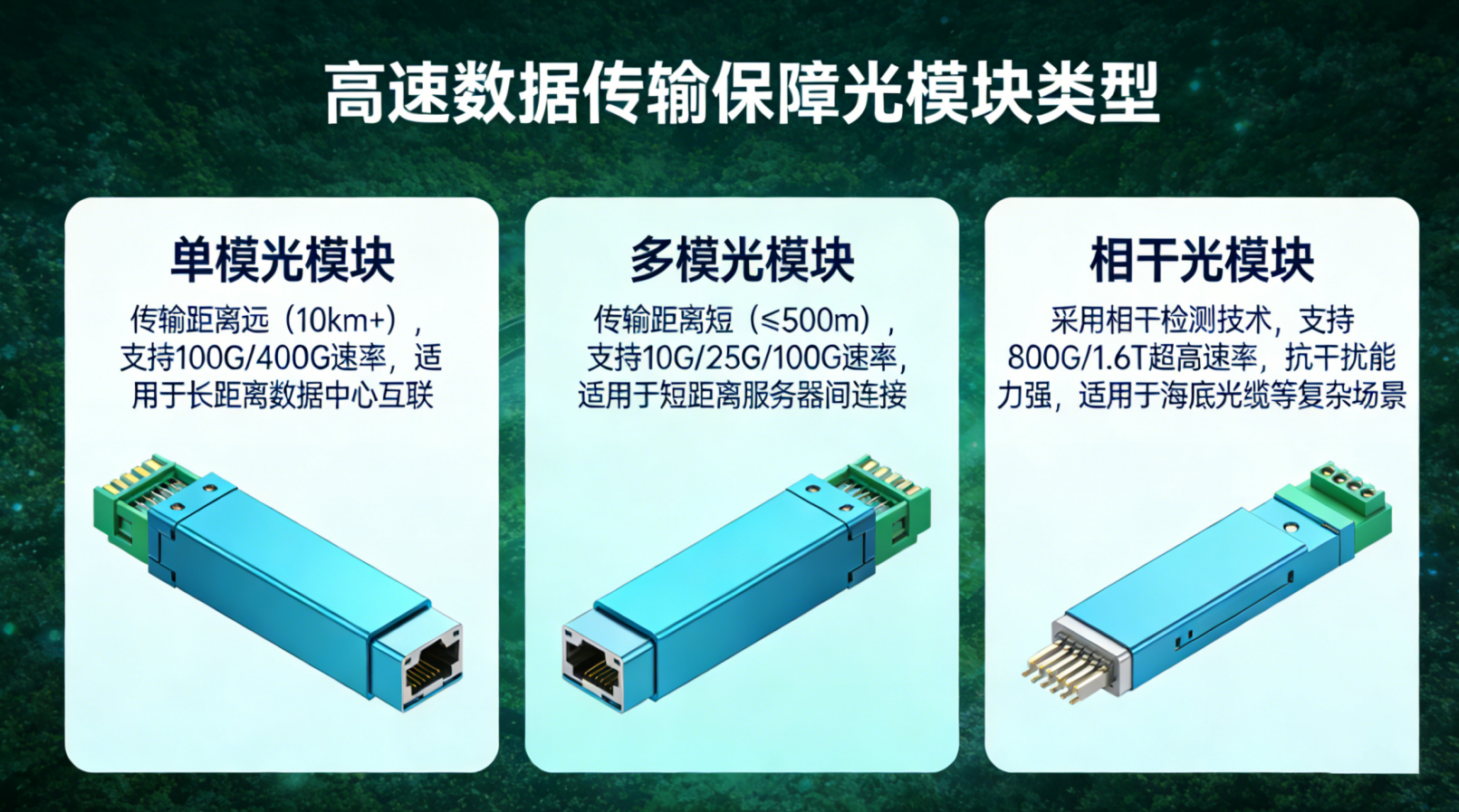

SR, LR, ER, ZR, DR, FR: Decoding Reach Standards for Real-World Deployments

The reach classifications set what we can expect from different fiber types at various distances. Short Reach (SR) covers distances under 300 meters using multimode fiber, the kind of link often found connecting equipment within racks or between nearby rooms. Long Reach (LR) goes further, handling connections up to 10 kilometers over single-mode fiber, making it a good fit for campus and city-wide network setups. Extended Reach (ER) takes things out to around 40 km, while ZR stretches all the way to 80 km. These longer reaches need stronger lasers and better error correction to work properly in metro and regional backbone networks. More recently, DR and FR (often expanded as Data Center Reach and Fiber Reach) have emerged as specialized categories for modern data centers. DR typically covers 500 meter single-mode links between switches in spine-leaf architectures, whereas FR extends to about 2 kilometers over single-mode fiber. Both are specified in IEEE 802.3, which ensures compatibility between equipment from different manufacturers.
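As a rough planning aid, the reach codes can be treated as a lookup table. The sketch below picks the shortest class that covers a given link. The table uses nominal distances (with FR taken as 2 km over single-mode fiber), and the names are illustrative. Actual reach depends on the data rate, fiber grade, and the specific standard, so check the module datasheet before committing to a design.

```python
# (code, nominal max reach in km, fiber type), ordered from shortest to longest.
REACH_CLASSES = [
    ("SR", 0.3, "multimode"),
    ("DR", 0.5, "single-mode"),
    ("FR", 2.0, "single-mode"),
    ("LR", 10.0, "single-mode"),
    ("ER", 40.0, "single-mode"),
    ("ZR", 80.0, "single-mode"),
]


def pick_reach_class(distance_km, fiber="single-mode"):
    """Return the shortest reach class covering the distance, or None if none fits."""
    for code, max_km, fiber_type in REACH_CLASSES:
        if fiber_type == fiber and distance_km <= max_km:
            return code
    return None


print(pick_reach_class(0.1, fiber="multimode"))  # SR
print(pick_reach_class(1.5))                     # FR
print(pick_reach_class(25))                      # ER
print(pick_reach_class(120))                     # None: beyond the classes listed
```

Choosing the shortest class that fits is usually the sensible default, since longer-reach optics tend to cost more and draw more power.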