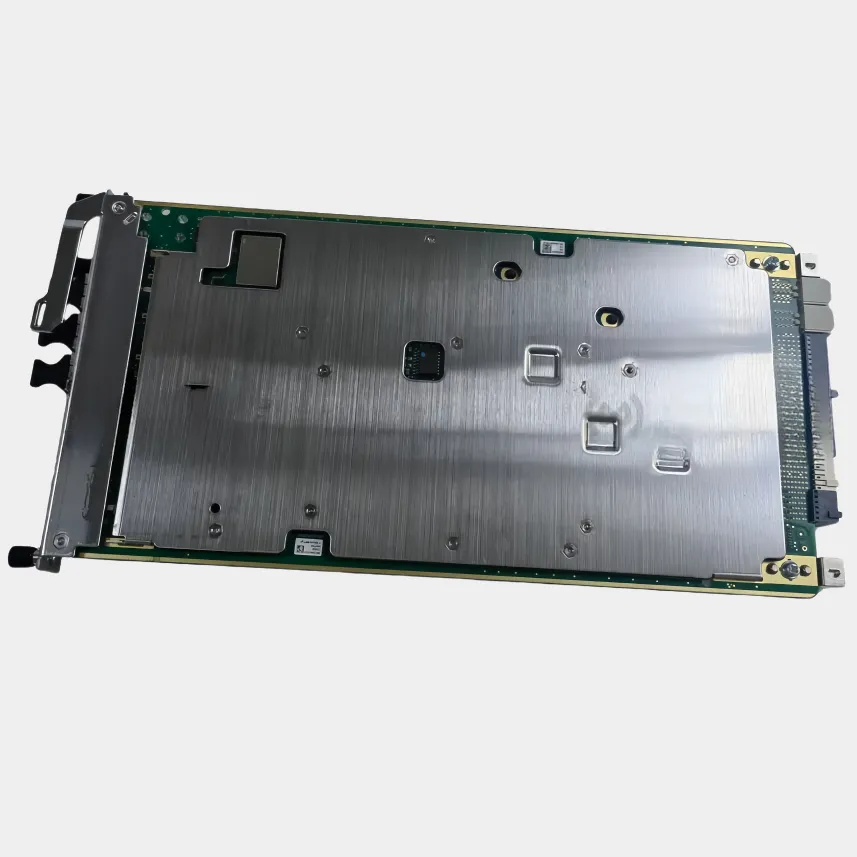

Baseband Unit (BBU) Functions and Responsibilities in Signal Processing

At the heart of modern base stations sits the Baseband Unit (BBU), which handles the critical digital signal processing. That includes modulation and demodulation, channel coding and error correction, and protocol handling across layers including PDCP, RLC, and RRC. Before anything is sent over the air, the BBU makes sure the signal conforms to the 3GPP standards that govern both LTE and 5G NR networks. What really sets BBUs apart, though, is how they separate control-plane functions from the user data moving through the system. When functions like handover management run separately from regular data flow, resource allocation can become smarter. Networks can then adapt on the fly when traffic spikes or drops unexpectedly, keeping operations running smoothly even during peak usage.
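To make the modulation step concrete, here is a minimal QPSK mapper and hard-decision demapper. It uses the same bit-to-symbol formula as the QPSK mapping in 3GPP TS 38.211, but it is only a sketch. A real BBU also applies scrambling, channel coding, and soft-decision (LLR) demapping, none of which are modeled here. The noise level in the round-trip test is an arbitrary assumption.

```python
import math
import random

SCALE = 1 / math.sqrt(2)  # normalizes QPSK symbols to unit average power


def qpsk_modulate(bits):
    """Map 0/1 bits to QPSK symbols: d = (1/sqrt(2)) * ((1 - 2*b0) + j*(1 - 2*b1))."""
    if len(bits) % 2:
        raise ValueError("QPSK needs an even number of bits")
    return [SCALE * complex(1 - 2 * bits[k], 1 - 2 * bits[k + 1])
            for k in range(0, len(bits), 2)]


def qpsk_demodulate(symbols):
    """Hard-decision demapping: a negative I or Q component means that bit was 1."""
    bits = []
    for s in symbols:
        bits.append(1 if s.real < 0 else 0)
        bits.append(1 if s.imag < 0 else 0)
    return bits


# Round trip through an additive Gaussian noise channel (sigma = 0.1 is an arbitrary choice).
rng = random.Random(0)
tx_bits = [rng.randint(0, 1) for _ in range(16)]
rx_symbols = [s + complex(rng.gauss(0, 0.1), rng.gauss(0, 0.1))
              for s in qpsk_modulate(tx_bits)]
print("bits recovered:", qpsk_demodulate(rx_symbols) == tx_bits)
```

Hard decisions keep the example short. A production receiver would pass soft values to the channel decoder instead.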

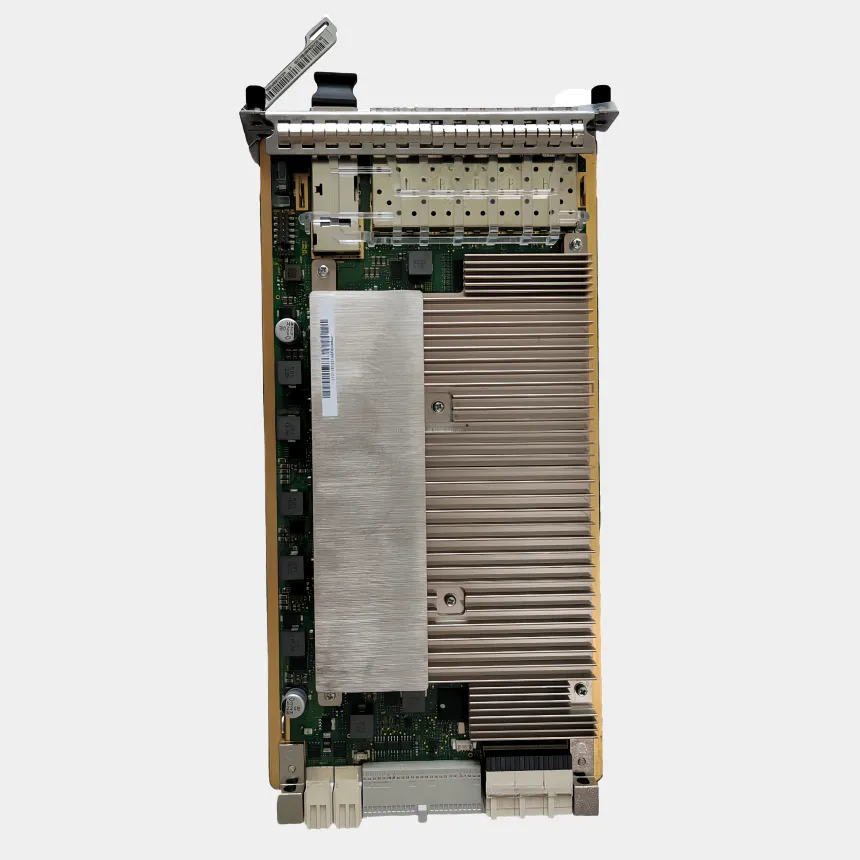

Remote Radio Unit (RRU) Role in RF Conversion and Antenna Interfacing

The Remote Radio Unit (RRU) sits right next to the antennas. There it converts baseband signals into RF carriers at frequencies such as 2.6 GHz or 3.5 GHz. This placement cuts feeder loss, which can be substantial: around 4 dB per 100 meters over conventional coaxial cable, especially at these higher frequencies. What does an RRU actually do? It performs digital-to-analog conversion for the downlink. It amplifies weak uplink signals from devices while adding minimal noise. It also supports multiple frequency bands, from 700 MHz up to 3.8 GHz, which can be combined through carrier aggregation. Placing these units close to the antennas also makes networks respond faster. Tests show latency drops by about 25% compared with older designs that relied on long cable runs between equipment locations.
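The 4 dB per 100 m figure is easier to appreciate as delivered power. The sketch below assumes loss grows linearly with cable length, which is a reasonable approximation for coax at a fixed frequency. It compares a short jumper at a tower-top RRU with longer feeder runs from a ground-level cabinet. The lengths are illustrative.

```python
def feeder_loss_db(length_m, loss_db_per_100m=4.0):
    """Coax loss in dB, assuming it scales linearly with cable length."""
    return loss_db_per_100m * length_m / 100.0


def power_fraction(loss_db):
    """Fraction of transmit power that survives a given loss in dB."""
    return 10 ** (-loss_db / 10.0)


# Illustrative runs: a short jumper at a tower-top RRU vs. longer feeders from a ground cabinet.
for length_m in (5, 50, 100):
    loss = feeder_loss_db(length_m)
    print(f"{length_m:>3} m of coax: {loss:.1f} dB, {power_fraction(loss):.0%} of power delivered")
```

At 100 m, a 4 dB loss means only about 40% of the power reaches the antenna. Tower-top RRU placement largely avoids this loss.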

Complementary Workflow: How BBU and RRU Enable End-to-End Signal Transmission

BBU and RRU work together via high-speed fiber links using CPRI or eCPRI protocols to form a seamless signal chain from digital processing to over-the-air transmission.

| Component | Responsibilities | Fronthaul Requirement |
|---|---|---|
| BBU | Baseband processing, resource allocation | 10–20 Gbps per cell |
| RRU | RF transmission, interference mitigation | <1 ms latency threshold |

This split architecture centralizes BBUs while placing RRUs at tower tops, improving spectral efficiency by 32% in urban environments. Separating the two units also allows each to be upgraded independently, which is particularly beneficial in evolving O-RAN ecosystems.
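The per-cell bandwidth in the table follows from how CPRI carries time-domain IQ samples. A common back-of-the-envelope estimate starts with the sample rate and multiplies it by two (for the I and Q components), by the sample width, and by the antenna count. It then adds CPRI's 16/15 control-word overhead and 8B/10B line coding. The sketch assumes an LTE 20 MHz carrier (30.72 Msps, 15-bit samples) and ignores compression. For one antenna it gives 1.2288 Gbps, the CPRI option 2 line rate. An 8-antenna cell lands near 9.8 Gbps, close to the 10 Gbps lower bound in the table.

```python
def cpri_line_rate_gbps(sample_rate_msps, bits_per_sample, antennas,
                        control_overhead=16 / 15, line_coding=10 / 8):
    """Approximate CPRI line rate for uncompressed time-domain IQ transport.

    Each antenna carries I and Q samples; 1 of every 16 CPRI words is control,
    and 8B/10B line coding adds 25% on the wire.
    """
    iq_bits_per_s = sample_rate_msps * 1e6 * 2 * bits_per_sample * antennas
    return iq_bits_per_s * control_overhead * line_coding / 1e9


# LTE 20 MHz carrier: 30.72 Msps with 15-bit I and Q samples.
for antennas in (1, 2, 8):
    rate = cpri_line_rate_gbps(30.72, 15, antennas)
    print(f"{antennas} antenna(s): {rate:.4f} Gbps")
```

The rate grows linearly with antenna count and is independent of user traffic. That linear growth is why CPRI struggles with massive MIMO.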

Fiber-Based Fronthaul Connectivity: Linking BBU and RRU with CPRI and eCPRI

High-Speed Fiber-Optic Links in BBU-RRU Communication

Fiber-optic cables form the backbone of today's fronthaul networks, providing the high bandwidth and low latency needed to connect BBUs to RRUs. These links carry data rates above 25 Gbps. They transport digitized radio signals reliably and are immune to electromagnetic interference. That immunity matters a lot in crowded urban areas where lots of equipment runs at once. The CPRI protocol runs over bidirectional fiber to keep the baseband processing in the BBU synchronized with the RF work done by the RRU. This synchronization helps maintain signal quality end to end.

CPRI vs. eCPRI: Protocols for Fronthaul Efficiency and Bandwidth Management

As networks move toward 5G, many operators have adopted enhanced CPRI (eCPRI). Its main appeal is that it cuts fronthaul bandwidth requirements by as much as ten times compared with conventional CPRI. Traditional CPRI works differently. It needs dedicated fiber capacity for each antenna stream and operates strictly at Layer 1, transporting raw IQ samples. The problem is that regular CPRI cannot scale to the large MIMO configurations that are becoming common today. That's where eCPRI shines. By switching to Ethernet-based transport, it lets multiple RRUs share fronthaul capacity through statistical multiplexing. The result is much better fronthaul efficiency without the extra infrastructure costs.

| Metric | CPRI (4G Focus) | eCPRI (5G Optimized) |
|---|---|---|
| Typical Link Capacity | 10 Gbps per link | 25 Gbps shared pool |
| Latency Tolerance | < 100 μs | < 250 μs |
| Functional Split | Strict Layer 1 | Option 7-2x split |

This evolution cuts fronthaul costs by 30% and supports scalable millimeter-wave deployments.
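A toy calculation illustrates the statistical-multiplexing argument. The sketch assumes each CPRI link must be provisioned for its own peak, since CPRI streams IQ at a constant rate regardless of traffic. It also assumes that Ethernet-based eCPRI traffic roughly tracks scheduled load, so several RRUs can share one pipe sized for the aggregate peak. The load figures are invented for illustration.

```python
def dedicated_capacity(load_series):
    """CPRI-style: each RRU gets its own link sized for its own peak."""
    return sum(max(series) for series in load_series)


def shared_capacity(load_series):
    """eCPRI-style: one shared Ethernet pipe sized for the peak of the aggregate load."""
    return max(sum(step) for step in zip(*load_series))


# Hypothetical fronthaul load (Gbps) for three RRUs sampled at 09:00, 13:00, 18:00 and 22:00.
loads = {
    "office":      [8.0, 3.0, 2.0, 2.0],
    "shopping":    [2.0, 8.0, 3.0, 2.0],
    "residential": [2.0, 2.0, 3.0, 8.0],
}
series = list(loads.values())
dedicated = dedicated_capacity(series)
shared = shared_capacity(series)
print(f"dedicated: {dedicated} Gbps, shared: {shared} Gbps, "
      f"multiplexing gain: {dedicated / shared:.2f}x")
```

Here the shared pipe needs 13 Gbps instead of 24 Gbps because the three RRUs never peak at the same time. If their peaks coincided, the gain would shrink toward 1x.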

Latency, Capacity, and Synchronization Considerations in Fronthaul Design

Getting the timing right matters a lot. A synchronization error larger than 50 nanoseconds disrupts beamforming and other time-sensitive functions in 5G networks. That's why modern fronthaul designs use IEEE 802.1CM, the Time-Sensitive Networking (TSN) profile for fronthaul, to keep control signals moving predictably through the network. For capacity, most operators have moved to 25G transceivers. These tolerate link loss of around 1.5 dB per kilometer, about two thirds better than older 10G systems. Together, these upgrades keep response times under a millisecond even when baseband units are placed as far as 20 kilometers from the remote radio units in centralized architectures.
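The 20 km distance has a predictable cost in propagation delay. Light in standard single-mode fiber travels at roughly c/1.47, or about 5 µs per kilometer. The group index below is an assumed typical value. The sketch also ignores transceiver, switching, and processing delays, which count against the same budget.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
GROUP_INDEX = 1.468  # assumed typical group index for standard single-mode fiber


def fiber_delay_us(distance_km, group_index=GROUP_INDEX):
    """One-way propagation delay through fiber, in microseconds."""
    return distance_km * group_index / SPEED_OF_LIGHT_KM_PER_S * 1e6


for km in (1, 10, 20):
    one_way = fiber_delay_us(km)
    print(f"{km:>2} km: {one_way:6.1f} us one way, {2 * one_way:6.1f} us round trip")
```

At 20 km the fiber alone uses roughly 0.2 ms of round-trip budget before any processing, which still leaves room under the 1 ms target.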

Network Architecture Evolution: From D-RAN to C-RAN and vRAN

D-RAN vs. C-RAN: Impact on BBU Deployment and RRU Distribution

Traditional Distributed RAN (D-RAN) setups place a Baseband Unit at each cell site, right next to the Remote Radio Unit. This keeps signal delay below 1 millisecond. However, it leaves a lot of duplicated equipment sitting idle most of the time and makes sharing resources between sites difficult. Centralized RAN (C-RAN) instead pools the BBUs in central locations and links them over fiber to the RRUs at each site. According to Dell'Oro's 2023 report, this change can cut operating expenses by roughly 17% to 25%. Network operators also gain the ability to shift processing capacity to where it's needed most as traffic patterns change throughout the day.

Centralized BBU Pools in C-RAN for Improved Resource Sharing and Efficiency

By pooling BBUs in centralized facilities, operators can manage hundreds of RRUs from a single location. Benefits include:

- Hardware consolidation: A 24-cell deployment requires 83% fewer BBU chassis than equivalent D-RAN setups

- Energy optimization: Load balancing reduces base station power consumption by 35% (Ericsson case study 2022)

- Advanced coordination: Enables techniques like coordinated multipoint (CoMP) for efficient 5G millimeter-wave beamforming
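The 83% hardware figure can be reproduced with simple arithmetic, though the source does not state its assumptions. The sketch assumes one BBU chassis per cell in D-RAN and a hypothetical pooled chassis that serves 6 cells. Under those two assumptions, 24 cells need 4 chassis instead of 24.

```python
import math


def bbu_chassis_needed(cells, cells_per_chassis):
    """Chassis count when cells are packed into shared BBU chassis."""
    return math.ceil(cells / cells_per_chassis)


cells = 24
d_ran = cells                          # D-RAN: assumed one BBU chassis per cell
c_ran = bbu_chassis_needed(cells, 6)   # C-RAN: assumed 6 cells per pooled chassis (hypothetical)
reduction = 1 - c_ran / d_ran
print(f"D-RAN: {d_ran} chassis, C-RAN: {c_ran} chassis, reduction: {reduction:.0%}")
```

Real pool sizing also depends on peak-hour load per cell, redundancy requirements, and fronthaul reach, so the per-chassis capacity is the key assumption to check.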

Virtualized RAN (vRAN): Evolving BBU Functions into Cloud-Based Processing

vRAN decouples baseband processing from proprietary hardware, running virtualized BBU (vBBU) functions on commercial off-the-shelf cloud servers. This shift brings:

- Flexible scaling: Processing resources scale dynamically with traffic patterns

- Edge integration: 67% of operators deploy vBBUs alongside Multi-access Edge Computing (MEC) nodes to minimize latency (Nokia 2023)

- Interoperability challenges: Achieving sub-700 μs synchronization still demands specialized acceleration hardware, even in multi-vendor deployments
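Flexible scaling is essentially a capacity-planning loop. The sketch below computes how many vBBU instances would be kept running at different times of day. It assumes a hypothetical per-instance capacity of 5 Gbps and a 20% headroom target. A real orchestrator would also account for instance startup time and accelerator availability.

```python
import math


def vbbu_instances(traffic_gbps, capacity_per_instance_gbps=5.0, headroom=0.2, minimum=1):
    """Instances needed so that each runs at no more than (1 - headroom) of its capacity."""
    usable = capacity_per_instance_gbps * (1 - headroom)
    return max(minimum, math.ceil(traffic_gbps / usable))


# Hypothetical aggregate traffic (Gbps) served by one vBBU pool at different hours.
hourly_traffic = {"03:00": 2.0, "09:00": 14.0, "13:00": 22.0, "20:00": 30.0}
for hour, gbps in hourly_traffic.items():
    print(f"{hour}: {gbps:5.1f} Gbps -> {vbbu_instances(gbps)} vBBU instance(s)")
```

The `minimum` parameter keeps at least one instance alive overnight so that idle cells stay on air.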

The evolution from D-RAN to C-RAN and vRAN underscores how centralization and virtualization enhance network efficiency, scalability, and cost-effectiveness.

O-RAN and Functional Splits: Redefining BBU-RRU Collaboration

O-RAN Alliance Standards and Open Interface Requirements for BBU and RRU

The O-RAN Alliance is pushing for more open, interoperable radio access networks by standardizing how baseband units (BBUs) and remote radio units (RRUs) talk to each other. In practice, this means operators can mix and match equipment from different vendors instead of being locked into one supplier's ecosystem. The alliance defines several ways to split functions between these components, such as Option 7.2x. In this split, the RLC and MAC layers and the upper part of the physical layer run in the BBU. Lower physical-layer tasks and RF processing happen in the RRU. A paper published last year in Applied Sciences found that this configuration keeps fronthaul delays under 250 microseconds. That is a notable result given how sensitive wireless networks are to timing. There is a trade-off, of course. Open standards widen the choice of equipment, but they also demand tighter coordination across components so that everything works together without hurting overall performance.
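One way to reason about functional splits is as a single cut point in the protocol stack. The sketch below models the stack as an ordered list. Every layer at or above the cut goes to the BBU and the rest goes to the RRU. The layer names and the BBU/RRU mapping to O-RAN's O-CU/O-DU/O-RU are a simplification, since the real Split 7.2x boundary lies inside the PHY and is defined in O-RAN WG4 specifications.

```python
from enum import Enum


class Unit(Enum):
    BBU = "BBU (O-CU/O-DU)"
    RRU = "RRU (O-RU)"


# Simplified protocol stack, ordered from the top of the RAN down to the antenna.
STACK = ["RRC", "PDCP", "RLC", "MAC", "High-PHY", "Low-PHY", "RF"]


def place_functions(split_after):
    """Everything up to and including `split_after` stays in the BBU; the rest goes to the RRU."""
    cut = STACK.index(split_after) + 1
    return {layer: (Unit.BBU if i < cut else Unit.RRU) for i, layer in enumerate(STACK)}


option_7_2x = place_functions("High-PHY")
for layer, unit in option_7_2x.items():
    print(f"{layer:<9} -> {unit.value}")
```

Calling `place_functions("Low-PHY")` instead models the Option 8 split associated with CPRI, where only RF remains in the RRU.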

Functional Split Options (e.g., Split 7-2x) in O-RAN Architectures

The Split 7.2x specification divides processing between components in two main ways. With Category A, more of the work, notably precoding, happens on the BBU side. That keeps RRUs simpler but creates more traffic on the fronthaul link. Category B, on the other hand, pushes precoding down to the RRU itself. This setup gives better performance when dealing with signals coming back from users, though it makes the hardware more complicated. According to recent industry reports, around two thirds of network operators have chosen Category B for their massive MIMO deployments because it gives them much better control over interference. The technology keeps evolving, too. Take the 2023 ULPI (uplink performance improvement) work, for instance. It has been relocating certain equalization functions within the architecture to squeeze out further uplink performance gains. These are exactly the kinds of improvements that O-RAN working group documents have been highlighting lately.
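A simplified model shows why Category A generates more fronthaul traffic. If the BBU does the precoding, the fronthaul must carry one frequency-domain IQ stream per transmit path. If the RRU does it (Category B), the fronthaul carries one stream per MIMO layer. The sketch makes several assumptions:

- a fully loaded 100 MHz NR carrier at 30 kHz subcarrier spacing (273 PRBs)
- 9-bit I and Q components, as with block floating-point compression
- a hypothetical 32 transmit paths and 8 layers

It ignores compression exponents, eCPRI headers, and control-plane traffic.

```python
def fh_stream_gbps(prbs=273, scs_khz=30, bits_per_component=9):
    """Approximate frequency-domain IQ rate for one stream (no headers or exponents)."""
    subcarriers = prbs * 12
    symbols_per_s = 14 * 1000 * (scs_khz // 15)  # 14 OFDM symbols per slot, normal cyclic prefix
    return subcarriers * symbols_per_s * 2 * bits_per_component / 1e9


def fronthaul_gbps(streams, **kwargs):
    """Total fronthaul rate when `streams` IQ streams are carried."""
    return streams * fh_stream_gbps(**kwargs)


cat_a = fronthaul_gbps(streams=32)  # precoding in BBU: one stream per TX path (assumed 32T)
cat_b = fronthaul_gbps(streams=8)   # precoding in RRU: one stream per MIMO layer (assumed 8 layers)
print(f"Category A-style: {cat_a:.1f} Gbps, Category B-style: {cat_b:.1f} Gbps")
```

In this model the ratio is simply transmit paths divided by layers. The gap therefore widens as antenna arrays grow faster than the number of spatial layers.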

Balancing Interoperability and Performance in Open RAN Deployments

O-RAN does offer potential long-term cost savings and lets operators work with multiple vendors. However, matching the performance of traditional integrated RAN systems is still tough. The problem comes down to differences between manufacturers in hardware acceleration capabilities and software maturity. These differences make it hard to hit key targets for data throughput and power consumption. Centralized BBU pools also need extremely reliable fronthaul connections, with jitter kept below about 100 nanoseconds according to industry standards. Most experts suggest starting slowly with lower-risk urban sites, where problems won't cause major disruptions. This approach lets operators verify interoperability and performance before committing across larger areas.
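A fronthaul acceptance test ultimately compares measured timing variation against a budget. The sketch below applies the roughly 100 ns figure from the text to hypothetical packet arrival offsets. It measures jitter as peak-to-peak variation, which is an assumption. Test procedures may instead use a statistical measure.

```python
JITTER_BUDGET_NS = 100.0  # approximate budget cited in the text


def jitter_ns(arrival_offsets_ns):
    """Peak-to-peak jitter: spread between the earliest and latest arrival offsets."""
    return max(arrival_offsets_ns) - min(arrival_offsets_ns)


def within_budget(arrival_offsets_ns, budget_ns=JITTER_BUDGET_NS):
    """True if the measured peak-to-peak jitter fits inside the budget."""
    return jitter_ns(arrival_offsets_ns) <= budget_ns


# Hypothetical offsets (ns) of fronthaul packets relative to their scheduled arrival times.
link_a = [12.0, -8.0, 30.0, 5.0, -20.0]
link_b = [40.0, -65.0, 10.0, 55.0, -5.0]
for name, offsets in (("link A", link_a), ("link B", link_b)):
    print(f"{name}: {jitter_ns(offsets)} ns, within budget: {within_budget(offsets)}")
```

In a pilot deployment, a check like this would run over long captures from each candidate site before the rollout is extended.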